Dr. K. L. Mittal, Dr. Robert H. Lacombe

The previous issue of the “SURFACES: THE INVISIBLE UNIVERSE” blog focused on the topic of polymer surface modification. In this issue we continue on this topic and would again like to remind the reader of the upcoming Tenth International Symposium on Polymer Surface Modification: Relevance to Adhesion, to be held at the University of Maine, Orono, June 22-24, 2015. All readers are cordially invited to join the symposium, either to present a paper on their current work in this field or simply to attend and greatly expand their awareness of current developments. Further details are available on the conference web site at: www.mstconf.com/surfmod10.htm

PLASMA CHEMISTRY OF POLYMER SURFACES

We continue our discussion of polymer surface modification with a book on this most important subject:

The Plasma Chemistry of Polymer Surfaces: Advanced Techniques for Surface Design, by Jörg Friedrich (Wiley-VCH Verlag GmbH & Co. KGaA, 2012)

The author began his studies in Macromolecular Chemistry at the German Academy of Sciences in Berlin, has been active in this field ever since, and is now a professor at the Technical University of Berlin. He is best known to us through his participation in previous gatherings of the above-mentioned Polymer Surface Modification symposium series going back to 1993.

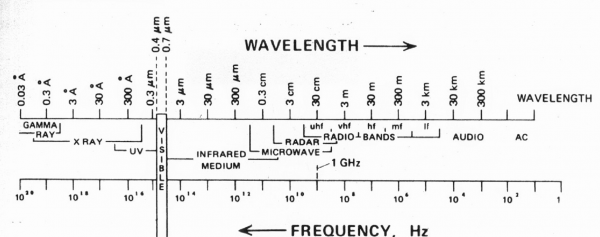

In his introduction Prof. Friedrich points out the seemingly incredible fact that more than 99% of all visible matter is in the plasma state. A moment's reflection, however, easily confirms this statement, since the Sun, which by itself accounts for more than 99% of the mass of the solar system, is in fact an exceedingly dense and hot ball of plasma. Here on Earth the plasma state is rarely observed outside of special devices, and consists mainly of low-pressure and atmospheric-pressure plasmas, which supply moderate quantities of energy transferred mainly through the kinetic energy of free electrons. Such plasmas have sufficient energy to produce reactive species and photons which are able to initiate all types of polymerizations or activate the surface of normally inactive polymers. Thus plasmas offer the opportunity to promote chemical reactions at surfaces which would otherwise be difficult to achieve. However, the very active nature of plasma systems also presents a problem: the broadly distributed energies in the plasma can also initiate a wide range of unwanted reactions, including polymer chain scission and crosslinking. The problem then becomes how to tame the plasma so that it performs only the desired chemical reactions while eliminating unwanted and destructive processes. We will give this topic more attention shortly, but first a quick look at the contents of the volume.

The volume is divided into 12 separate chapters as follows:

- Introduction

- Interaction Between Plasma and Polymers

- Plasma

- Chemistry and Energetics in Classic and Plasma Processes

- Kinetics of Polymer Surface Modification

- Bulk, Ablative and Side Reactions

- Metallization of Plasma-Modified Polymers

- Accelerated Plasma-Aging of Polymers

- Polymer Surface Modifications with Monosort Functional Groups

- Atmospheric-Pressure Plasmas

- Plasma Polymerization

- Pulsed-Plasma Polymerization

Given the above list, I think it can fairly be said that the volume covers the entire range of surface chemistries associated with plasma processes, far more topics than can be adequately addressed in this review. The remainder of this column will therefore focus on the problem outlined above: controlling the surface chemistry by taming normally indiscriminate plasma reactions. This problem is discussed in chapters 9 and 12 of Prof. Friedrich’s book.

Chapter 9 attacks the problem of controlling the otherwise unruly surface chemistry initiated by aggressive plasma reactions through the use of “monosort functional” groups, that is, surfaces carrying a single type of functional group. For the benefit of the uninitiated we give a short tutorial on the concept of the functional group in organic chemistry. The term arises from classic organic chemistry and typically refers to chemical groups that engage in well-known, characteristic chemical reactions. The classic example is a group attached to a hydrocarbon chain.

As is well known, the saturated hydrocarbons (the alkanes) form a series of molecules composed solely of carbon and hydrogen. The simplest is methane, the main constituent of natural gas, which is a single carbon atom with 4 hydrogens attached in a tetrahedral geometry, commonly written CH4. The chemistry of carbon allows it to form chains of indefinite length, and in this series each interior carbon is bonded to two other carbons and two hydrogens, while each terminal carbon is bonded to one other carbon and 3 hydrogens. Moving up the series we reach the 8-carbon chain called octane, a representative component of the gasoline which powers most motor vehicles. Octane has 6 interior carbons and 2 end carbons; the interior carbons carry two hydrogens each and the end carbons carry 3 each, giving a total of 6 × 2 + 2 × 3 = 18 hydrogens. Octane is thus designated C8H18. Moving on to indefinitely long chains we arrive at polyethylene, a common thermoplastic used to fabricate all varieties of plastic containers such as Tupperware®, plastic sheeting and wire insulation. Apart from being quite flammable, the low molecular weight hydrocarbons have a rather boring chemistry in that they react only sluggishly with other molecules. However, if so-called functional groups are introduced, the chemistry becomes much more interesting.

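
The hydrogen count worked out for octane above generalizes to every member of the series. The sketch below is a minimal illustration, assuming straight (unbranched) chains; the helper names `alkane_hydrogens` and `alkane_formula` are hypothetical, chosen only for this example. Interior carbons contribute 2 H and the two end carbons 3 H each, which reduces to the familiar alkane formula CnH2n+2.

```python
def alkane_hydrogens(n_carbons: int) -> int:
    """Count hydrogens on an unbranched alkane chain of n_carbons carbons.

    Hypothetical helper for illustration: interior carbons carry 2 H each,
    the two terminal carbons carry 3 H each; methane is the one-carbon special case.
    """
    if n_carbons < 1:
        raise ValueError("need at least one carbon")
    if n_carbons == 1:
        return 4  # methane, CH4: a lone carbon carries four hydrogens
    interior = n_carbons - 2
    return 2 * interior + 3 * 2  # simplifies to 2n + 2


def alkane_formula(n_carbons: int) -> str:
    """Molecular formula string, omitting a subscript of 1 (e.g. 'CH4', 'C8H18')."""
    carbon = "C" if n_carbons == 1 else f"C{n_carbons}"
    return f"{carbon}H{alkane_hydrogens(n_carbons)}"


if __name__ == "__main__":
    for n in (1, 2, 8, 1000):
        h = alkane_hydrogens(n)
        # The H/C ratio tends toward 2 for long chains: the CH2 repeat unit of polyethylene
        print(alkane_formula(n), f"H/C = {h / n:.3f}")
```

Running it lists methane, ethane, octane and a 1000-carbon chain; the last one's H/C ratio is already very close to 2, which is why polyethylene is usually described by its CH2 repeat unit.
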
Take the case of ethane, C2H6, the second molecule in the series, a gas similar to methane but roughly twice as heavy. If we replace one of its hydrogens with what is called the hydroxyl functional group, designated -O-H, which is essentially a fragment of a water molecule (i.e., H-O-H with one H lopped off), we get C2H5OH (molecular formula C2H6O), a molecule with dramatically different properties. Ethane, a gas that is practically insoluble in water, becomes ethanol, a liquid that mixes with water in all proportions, also known as grain alcohol and much better known as the active ingredient in alcoholic beverages. Thus through the use of functional groups chemists can work nearly miraculous changes in the properties of common materials, and Prof. Friedrich’s monosort functionalization is a process for using plasmas to perform this bit of magic on polymer surfaces by attaching the appropriate functional groups. The process can be rather tricky, however, and requires an understanding of the physical processes involved at the atomic and molecular level.
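
The substitution described above is simple atom bookkeeping, and the same bookkeeping checks the remark that ethane is roughly twice as heavy as methane. This is a minimal sketch, assuming rounded standard atomic weights; the helpers `molar_mass` and `hill_formula` are hypothetical names introduced only for this example.

```python
from collections import Counter

# Rounded standard atomic weights in g/mol
ATOMIC_MASS = {"C": 12.011, "H": 1.008, "O": 15.999}


def molar_mass(formula: Counter) -> float:
    """Sum atomic masses over the atom counts of a molecule."""
    return sum(ATOMIC_MASS[element] * count for element, count in formula.items())


def hill_formula(formula: Counter) -> str:
    """Write C first, H second, other elements alphabetically (assumes carbon is present)."""
    order = ["C", "H"] + sorted(el for el in formula if el not in ("C", "H"))
    return "".join(
        el + (str(formula[el]) if formula[el] > 1 else "")
        for el in order
        if formula[el] > 0
    )


methane = Counter({"C": 1, "H": 4})
ethane = Counter({"C": 2, "H": 6})

# Swap one hydrogen for a hydroxyl group (-O-H): lose one H, gain one O and one H
ethanol = ethane - Counter({"H": 1}) + Counter({"O": 1, "H": 1})

if __name__ == "__main__":
    for name, mol in (("methane", methane), ("ethane", ethane), ("ethanol", ethanol)):
        print(f"{name:8s} {hill_formula(mol):6s} {molar_mass(mol):7.3f} g/mol")
    print(f"ethane/methane mass ratio: {molar_mass(ethane) / molar_mass(methane):.2f}")
```

The mass ratio comes out a little under 2, consistent with "roughly twice as heavy"; the hydroxyl swap adds one oxygen while leaving the hydrogen count at six.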

The following example illustrates the nature of the problem and how successful functionalization can be carried out using plasma technology.

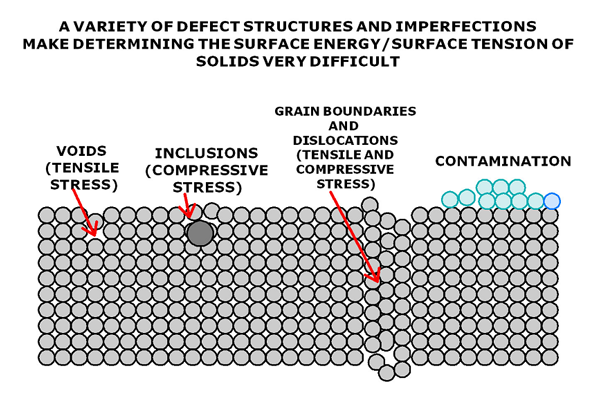

Figure (1a) illustrates the basic problem with most common plasma surface treatments. The exceedingly high energy associated with the ionization of oxygen, coupled with the equally high energies in the tail of the electron energy distribution, produces a panoply of functional groups plus free radicals that can cause degradation and crosslinking in the underlying polymer substrate. It is therefore difficult to control how the nonspecifically functionalized surface shown in Fig. (1a) will respond to further chemical treatment, such as the grafting on of a desired molecule, because the many reactive species present have widely different chemical behaviors.
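
To put a rough number on the electron-energy tail, one can assume that the electrons follow a Maxwellian (thermal) energy distribution with temperature T_e. That assumption is ours, not the book's, and real low-pressure discharges often deviate from it. Under it, the fraction of electrons with energy above a threshold E is erfc(√x) + 2√(x/π)·exp(−x), with x = E/kT_e. The sketch evaluates this for an assumed kT_e of 2 eV, an illustrative value only, against approximate thresholds: a typical C-H bond energy of roughly 4.3 eV and the O2 ionization energy of about 12.1 eV. The function name `fraction_above` is hypothetical.

```python
import math


def fraction_above(threshold_ev: float, electron_temp_ev: float) -> float:
    """Fraction of electrons with kinetic energy above threshold_ev.

    Assumes a Maxwellian electron energy distribution with temperature
    electron_temp_ev (kT_e in eV). The result is the upper regularized
    incomplete gamma function Q(3/2, x) with x = E / kT_e.
    """
    if threshold_ev <= 0:
        return 1.0
    x = threshold_ev / electron_temp_ev
    return math.erfc(math.sqrt(x)) + 2.0 * math.sqrt(x / math.pi) * math.exp(-x)


if __name__ == "__main__":
    te = 2.0  # assumed electron temperature in eV, for illustration only
    # Approximate threshold energies in eV (rounded literature values)
    thresholds = {
        "C-H bond (~4.3 eV)": 4.3,
        "O2 ionization (~12.1 eV)": 12.07,
    }
    for label, e in thresholds.items():
        print(f"{label}: {fraction_above(e, te):.2%} of electrons")
```

With these assumed numbers roughly a fifth of the electrons exceed a typical C-H bond energy and under one percent can ionize O2, which illustrates how a broad energy distribution reaches many different bond-breaking thresholds at once.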

Prof. Friedrich points out that, unfortunately, most plasma gases behave as shown in Fig. (1a), but somewhat surprisingly bromine (symbol Br) behaves differently owing to a special set of circumstances related to the thermodynamic behavior of this molecule, which are too technical to go into in this discussion. It turns out that bromine plasmas can be controlled to give a uniform functionalization of the polymer surface, as shown in Fig. (1b). The uniformly functionalized surface can then be subjected to further chemical treatment, such as grafting of specific molecules, to give a desired, well-controlled surface chemistry.

In a similar vein, in chapter 12 Prof. Friedrich approaches the problem of plasma polymerization through the use of pulsed as opposed to continuous plasma methods. The problem is much the same as the surface functionalization problem discussed above. A continuous plasma delivers a steady flux of energy which gives rise to unwanted reactions, whereas turning the energy field on and off in a carefully controlled manner limits the amount of excess energy dumped into the system and thus also the unwanted side reactions.
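
A common way to quantify the on/off control described above is the duty cycle, the fraction of each pulse period during which the plasma is on; the time-averaged power is the peak power multiplied by that fraction. This is a standard relation rather than one quoted from the book, and the class name `PulseSettings` and the pulse settings below are hypothetical, illustrative assumptions, not values from Prof. Friedrich's experiments.

```python
from dataclasses import dataclass


@dataclass
class PulseSettings:
    """Hypothetical pulsed-plasma settings (illustrative values only)."""

    peak_power_w: float  # power delivered while the plasma is on
    t_on_ms: float       # plasma-on time per period
    t_off_ms: float      # plasma-off time per period (0 models a continuous plasma)

    @property
    def duty_cycle(self) -> float:
        """Fraction of each period during which the plasma is on."""
        return self.t_on_ms / (self.t_on_ms + self.t_off_ms)

    @property
    def average_power_w(self) -> float:
        """Time-averaged power: peak power scaled by the duty cycle."""
        return self.peak_power_w * self.duty_cycle

    @property
    def energy_per_pulse_j(self) -> float:
        """Energy delivered during one on-phase (ms converted to s)."""
        return self.peak_power_w * self.t_on_ms / 1000.0


if __name__ == "__main__":
    continuous = PulseSettings(peak_power_w=300.0, t_on_ms=1.0, t_off_ms=0.0)
    pulsed = PulseSettings(peak_power_w=300.0, t_on_ms=1.0, t_off_ms=9.0)
    for name, s in (("continuous", continuous), ("pulsed", pulsed)):
        print(f"{name:10s} duty={s.duty_cycle:.2f} <P>={s.average_power_w:.1f} W")
```

In this sketch the two settings share the same peak power, but the pulsed one delivers a tenth of the average power, which is the sense in which pulsing limits the excess energy dumped into the system.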

As this post is already getting long, we leave it to the interested reader to explore the details by consulting Prof. Friedrich’s volume. As usual, the author welcomes any further comments or inquiries concerning this topic and may be readily contacted at the coordinates below.

Dr. Robert H. Lacombe, Chairman

Materials Science and Technology CONFERENCES

3 Hammer Drive, Hopewell Junction, NY 12533-6124

Tel. 845-897-1654, FAX 212-656-1016; E-mail: rhlacombe@compuserve.com
